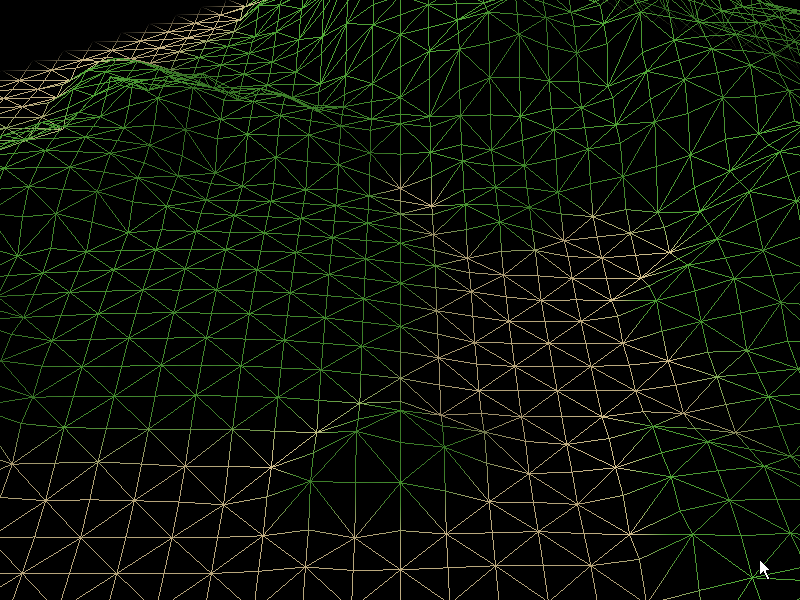

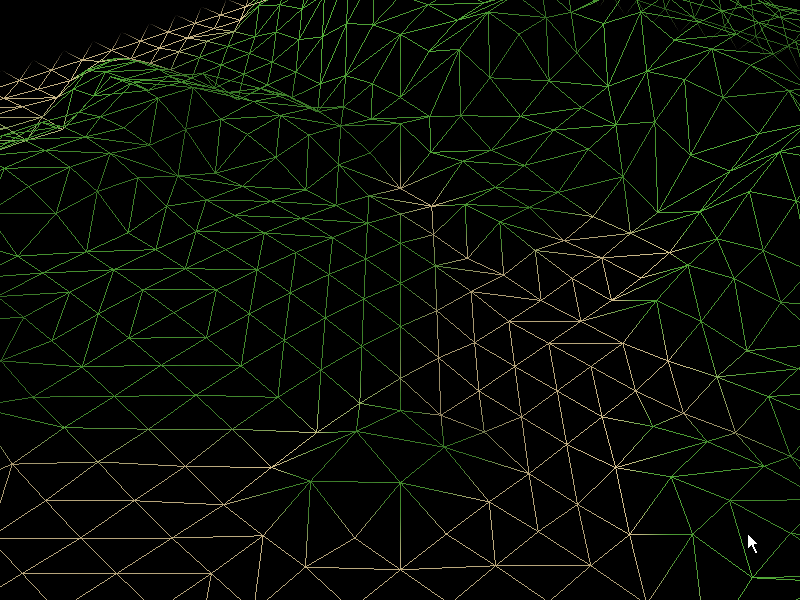

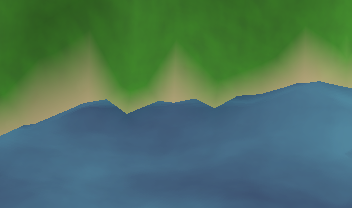

One of the reasons I haven’t been posting updates on Planitia is that I’ve had a weird graphical bug I haven’t been able to get rid of. How bad is it? Well…here, see for yourself:

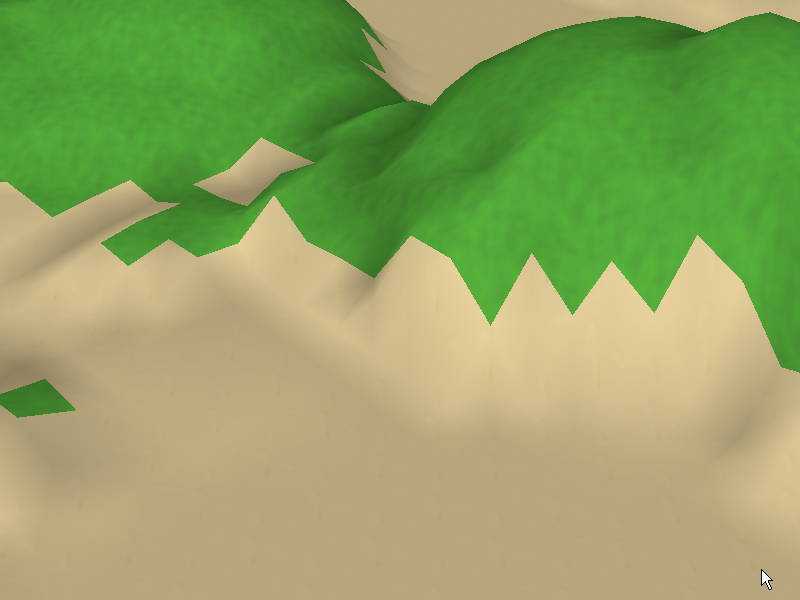

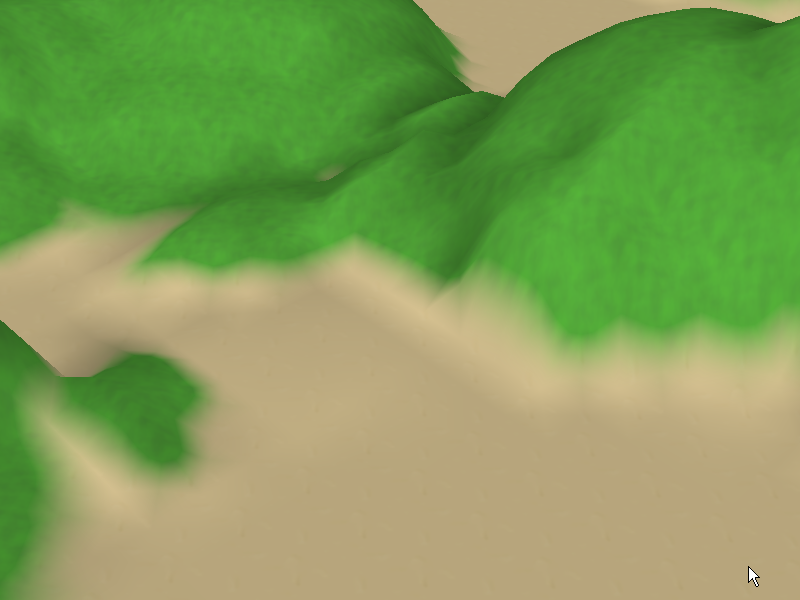

Note that some of the houses are drawing just fine, while others are drawing as green-and-brown smears. It’s not awful, but it’s like a pimple on an otherwise attractive face – it’s all you notice.

Now, it’s obvious what is happening: the houses that aren’t drawing correctly are losing their texture coordinates. Instead of stretching the whole texture over the house, the renderer samples a single texel from it, hence the solid green and brown colors. That’s exactly what you get when every texture coordinate on the mesh is (0, 0).
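Here’s a small C++ sketch of why all-zero texture coordinates produce a flat color. It’s a toy model, not Planitia’s renderer or Direct3D: the helper names (`interpolate`, `texelIndex`, `distinctTexelsSampled`) are made up, and it assumes simple nearest-neighbour sampling. The rasterizer blends the three vertex UVs across each triangle; if all three are (0, 0), every blend is (0, 0), so every pixel reads the same texel.

```cpp
#include <array>
#include <cassert>
#include <set>

// Texture coordinate pair (u, v), normally in [0, 1].
struct UV { float u, v; };

// Barycentric interpolation of the three vertex UVs; conceptually what the
// rasterizer does for every pixel a triangle covers.
UV interpolate(const std::array<UV, 3>& tri, float b0, float b1, float b2) {
    return { b0 * tri[0].u + b1 * tri[1].u + b2 * tri[2].u,
             b0 * tri[0].v + b1 * tri[1].v + b2 * tri[2].v };
}

// Nearest-neighbour (point) sampling: map a UV in [0, 1] to a texel index
// in a width x height texture.
int texelIndex(UV uv, int width, int height) {
    assert(width > 0 && height > 0);
    int x = static_cast<int>(uv.u * (width - 1) + 0.5f);
    int y = static_cast<int>(uv.v * (height - 1) + 0.5f);
    return y * width + x;
}

// Walk a grid of barycentric coordinates over the triangle and count how
// many distinct texels end up being sampled.
int distinctTexelsSampled(const std::array<UV, 3>& tri, int width, int height) {
    std::set<int> texels;
    const int steps = 16;
    for (int i = 0; i <= steps; ++i) {
        for (int j = 0; i + j <= steps; ++j) {
            float b0 = static_cast<float>(i) / steps;
            float b1 = static_cast<float>(j) / steps;
            float b2 = 1.0f - b0 - b1;
            texels.insert(texelIndex(interpolate(tri, b0, b1, b2), width, height));
        }
    }
    return static_cast<int>(texels.size());
}
```

A healthy triangle with UVs spread across the texture samples many texels; a triangle whose UVs are all (0, 0) samples exactly one. With filtering enabled the result would be a blend of the texels around (0, 0) rather than a single texel, but it would still be one constant color across the whole triangle.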

But it’s not obvious why that’s happening. The hardest bugs to track down are the ones that only happen some of the time, and DirectX’s infamous undebuggability just makes it worse. So after several evenings of fiddling with DirectX’s render states to absolutely no effect, I finally gave up and moved on to other stuff. I knew I’d have to come back and fix this bug eventually, and I wasn’t looking forward to it.

And this morning I decided to take another shot at it. My renderer supports two sets of texture coordinates, but this mesh doesn’t set the second one…perhaps it was picking up the second set by accident? Let’s turn the second set off completely. Damn! That doesn’t fix it! How about giving the second set the same coordinates as the first? Holy smoke, that doesn’t fix it either…
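For readers who haven’t juggled multiple UV sets, here’s roughly what that second experiment looks like in code. This is a hypothetical C++ vertex layout with two texture coordinate sets plus a helper that copies set 0 into set 1; the struct and function names are assumptions for illustration, not Planitia’s actual vertex format.

```cpp
#include <cassert>
#include <vector>

// Hypothetical vertex layout with two texture coordinate sets.
struct Vertex {
    float x, y, z;     // position
    float nx, ny, nz;  // normal
    float u0, v0;      // texture coordinate set 0 (the house texture)
    float u1, v1;      // texture coordinate set 1 (not used by this mesh)
};

// The stride the GPU-side vertex format has to agree with: 10 floats.
static_assert(sizeof(Vertex) == 10 * sizeof(float), "unexpected padding");

// True when both UV sets hold identical values on every vertex.
bool uvSetsMatch(const std::vector<Vertex>& vertices) {
    for (const Vertex& v : vertices) {
        if (v.u0 != v.u1 || v.v0 != v.v1) {
            return false;
        }
    }
    return true;
}

// The experiment: mirror set 0 into set 1, so that a stage accidentally
// reading the second set would still see valid coordinates.
void mirrorFirstUvSet(std::vector<Vertex>& vertices) {
    for (Vertex& v : vertices) {
        v.u1 = v.u0;
        v.v1 = v.v0;
    }
    assert(uvSetsMatch(vertices));
}
```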

Meanwhile, at work I’ve been building my first renderer for a production environment. It’s for a Kaplan SAT program. I’m working on the PC version, and I was having trouble with a cartoon shader I was writing. Searching for “debug vertex shaders” turned up several recommendations to “just use PIX”.

PIX? What’s that?

It’s the official DirectX debugging tool, and it ships with the DirectX SDK. And I had no idea it existed. Mostly because nobody told me. (Baleful glare at all my programmer friends.)

With PIX I was able to figure out what was wrong with my shader at work, so I decided to use it to try to fix my bug on Planitia.

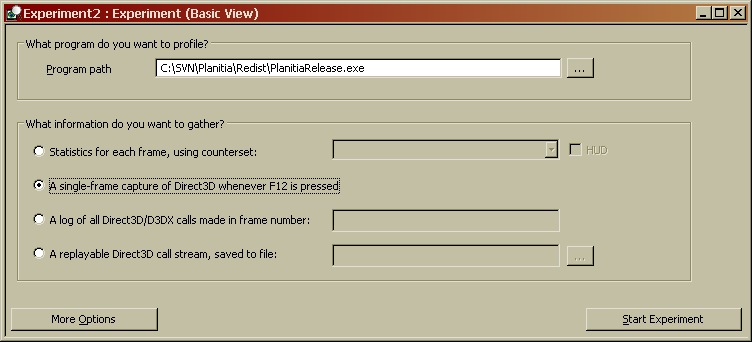

PIX is pretty easy to use. You start by creating a new experiment:

Point the Program path field at the executable you want to debug, then choose one of the four options below it. Options 1 and 4 collect the most data, but if you’re just debugging something that’s probably overkill (it’s much more useful when you’re optimizing). I like option 2, where PIX takes a “snapshot” of what DirectX is doing whenever you press F12.

Click “Start Experiment” and your program will run. PIX will add some text to show you that it is functioning properly:

Now it’s running. To debug my problem, I panned the camera over to a house that wasn’t drawing correctly and hit F12.

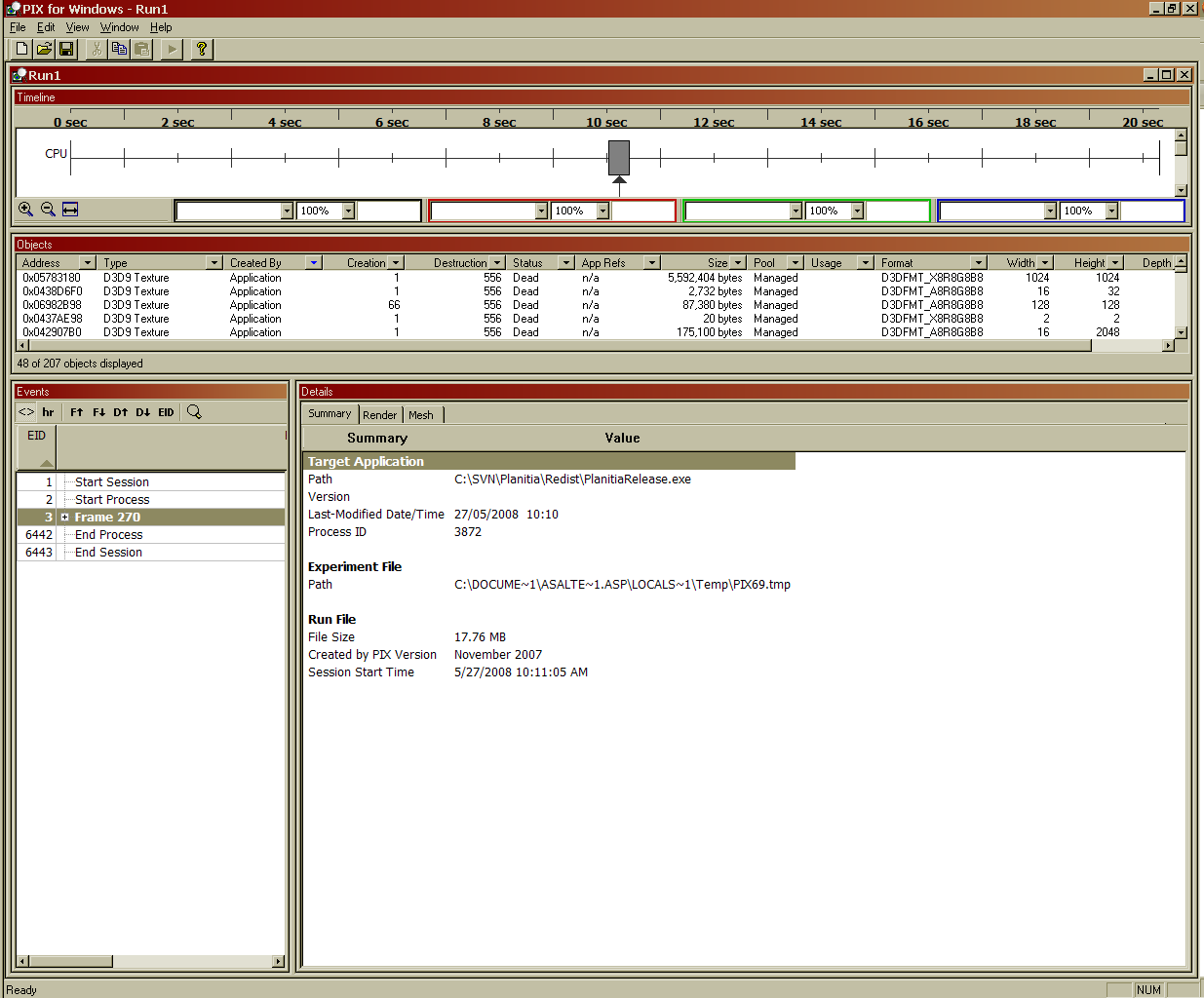

When I exit the program, PIX brings up the results of the experiment.

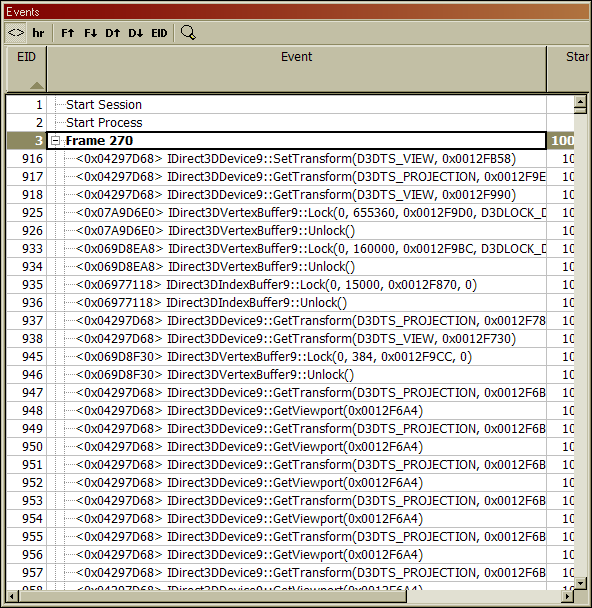

Now we’ve got a TON of information about what DirectX was doing during the frame we captured. Let’s look at the Events window…

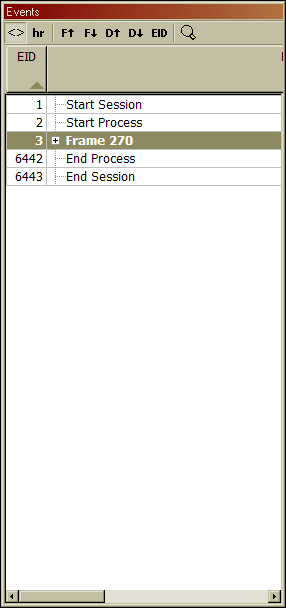

And expand Frame 270.

We now have a list of every. Single. Freakin’. Thing DirectX did during that frame. DirectX is inscrutable no more!

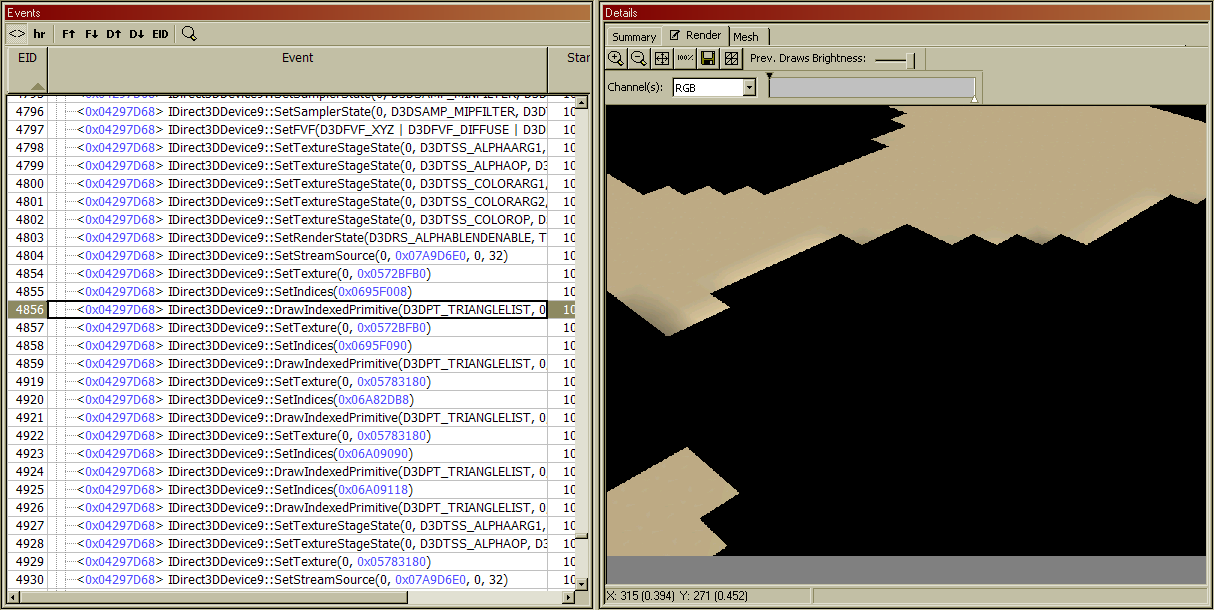

Not only do we have the list of commands, but the Details window shows exactly what each command drew:

So let’s step through the list of draw commands…ah, here’s the first house it drew. This house was drawn correctly (except that, since it wasn’t on screen, it wasn’t actually drawn at all, as the Viewport window shows). Notice the columns showing the texture coordinates for the house.

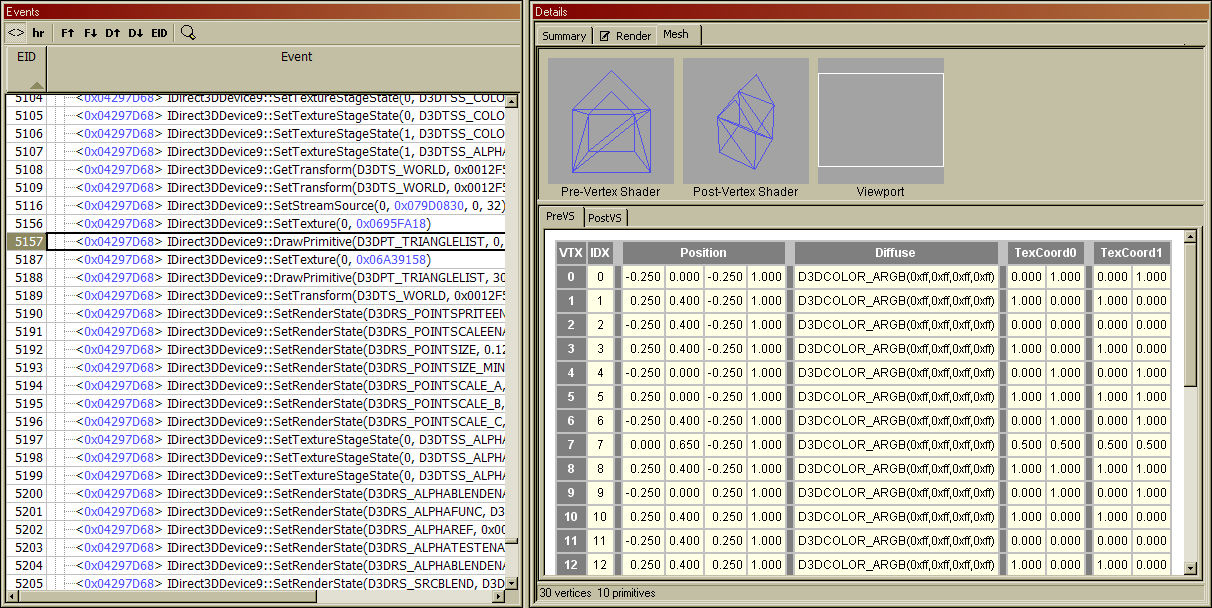

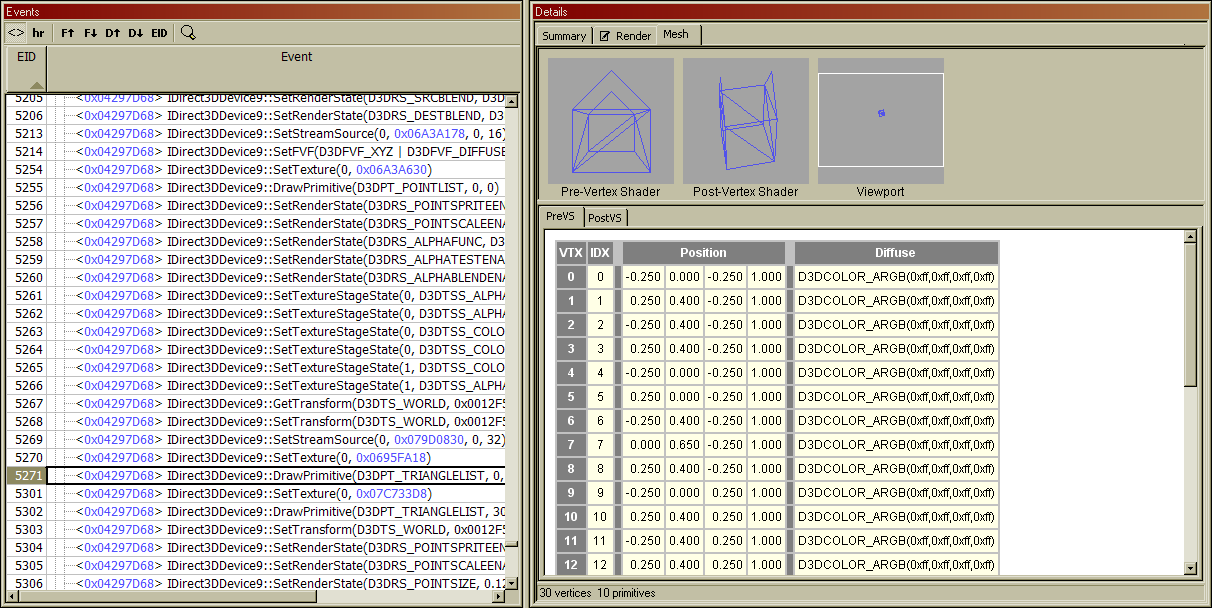

Let’s keep stepping…wait, what the hell?

A problem drawing a point sprite list? Why is it drawing a point sprite list? There aren’t any point sprites in the scene! Wait a minute, I’ll bet…

Yep. The very next thing it tries to draw is the broken house. Notice that the texture coordinates are now missing.

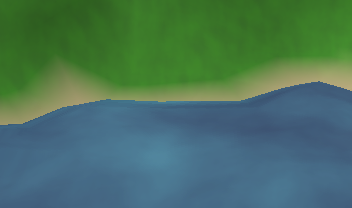

And now I know what the problem is. I was calling Draw() on a point sprite system that had no point sprites to draw. This put the renderer into “point sprite mode”, and point sprites don’t have any texture coordinates. Sometimes the renderer would recover on the next draw call, and sometimes it wouldn’t, so the house would be drawn with no texture coordinates.
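To show the general shape of this kind of bug, here’s a toy C++ model of render state leaking from one draw call into the next. Everything in it is hypothetical: `MockDevice`, `drawParticles`, and the rule that a zero-count point draw is rejected are assumptions for illustration, not Direct3D’s actual behavior or Planitia’s code. The point is only that state set by one call stays set until something resets it.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Toy stand-in for a device that keeps render state around until someone
// changes it. Not Direct3D; all names here are hypothetical.
struct MockDevice {
    bool pointSpriteMode = false;
    std::vector<std::string> frameLog;

    // Pretend a zero-count point draw is rejected, loosely mirroring the
    // "problem drawing a point sprite list" that PIX flagged.
    bool drawPoints(int count) {
        if (count == 0) {
            frameLog.push_back("points: error");
            return false;
        }
        frameLog.push_back("points: ok");
        return true;
    }

    // In this toy, a mesh drawn while point-sprite mode is on loses its UVs.
    void drawMesh(const std::string& name) {
        frameLog.push_back(name + (pointSpriteMode ? ": no UVs" : ": textured"));
    }
};

// Hypothetical particle system: it switches the state on before drawing and
// only switches it back off on the success path.
void drawParticles(MockDevice& dev, int particleCount) {
    dev.pointSpriteMode = true;
    if (!dev.drawPoints(particleCount)) {
        return;  // error path skips the cleanup below, leaking the state
    }
    dev.pointSpriteMode = false;
}
```

In this toy nothing resets the flag after the failed call, so every later mesh loses its UVs; in a real renderer, whether the next draw “fixes itself” would depend on whether that draw happens to set the state again.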

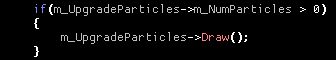

The fix: change this code:

To this code:

Time taken: ten minutes.

Minor lesson learned: I shouldn’t call DrawPrimitive() if I don’t actually have any primitives to draw.
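Here’s a hedged C++ sketch of that lesson, written against a toy device. `MockDevice`, `ScopedPointSpriteMode`, and `drawParticles` are hypothetical names, not Planitia’s code or the Direct3D API. The idea: skip the draw entirely when there are no particles, and tie the state change to a scope so it’s undone on every exit path.

```cpp
#include <cassert>

// Minimal toy device tracking a single render state (hypothetical; not Direct3D).
struct MockDevice {
    bool pointSpriteMode = false;
    int pointDrawCalls = 0;

    void drawPoints(int count) {
        assert(count > 0 && "callers should skip empty draws");
        ++pointDrawCalls;
    }
};

// RAII helper: turns point-sprite mode on for the lifetime of the object and
// restores the previous value on every exit path, including early returns.
class ScopedPointSpriteMode {
public:
    explicit ScopedPointSpriteMode(MockDevice& dev)
        : dev_(dev), previous_(dev.pointSpriteMode) {
        dev_.pointSpriteMode = true;
    }
    ~ScopedPointSpriteMode() { dev_.pointSpriteMode = previous_; }
    ScopedPointSpriteMode(const ScopedPointSpriteMode&) = delete;
    ScopedPointSpriteMode& operator=(const ScopedPointSpriteMode&) = delete;

private:
    MockDevice& dev_;
    bool previous_;
};

// The guarded version: bail out before touching any state when there is
// nothing to draw, and scope the state change when there is.
void drawParticles(MockDevice& dev, int particleCount) {
    if (particleCount <= 0) {
        return;  // no primitives, so no draw call and no state change
    }
    ScopedPointSpriteMode scope(dev);
    dev.drawPoints(particleCount);
}
```

The early return is the actual lesson; the scoped guard is belt-and-braces in case some later code path returns early while the state is set.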

Major lesson learned: I should use PIX – and I shouldn’t ever complain again about DirectX being undebuggable.