Warren Spector is hosting a series of master classes in game design at the University of Texas here in Austin.

Despite very short notice and a near-total lack of funds, I managed to squeak in. The first session was Monday night, and it was with Marc LeBlanc, who is most famous for his work on the classic Blue Sky/Looking Glass games (Ultima Underworld 1 and 2, System Shock, and Thief 1 and 2) and for his more recent game, Oasis.

The session took place in a studio in the CMB building on the UT campus and was professionally recorded. Doubtless all the sessions will be available in some fashion after the series is over, but, having never had the opportunity to go to the GDC or any other game conference, I am very grateful for the chance to see them live.

When I got there I was surprised – for one thing, the studio wasn’t full to bursting, and for another, most of the people there were fresh-faced college students rather than the slew of industry grognards I was expecting. I found myself wondering if these kids even knew who Marc was…

The format was one I hadn’t seen before. Warren interviewed Marc for about an hour on Marc’s work history, then after a brief break Marc presented a lecture on his core design philosophies. Then Warren interviewed him again, this time asking Marc about specific games he had worked on or contributed to. The session lasted about three hours, and I was fascinated throughout.

Now, I have to give Warren his props. I’d seen videos of him presenting at the GDC and he was very good there, but he also turns out to be an excellent interviewer.

But listening to Marc was a mind-expanding experience. This guy knows his stuff. You can get the gist of it by going to his blog and reading about his Eight Kinds of Fun and his Mechanics, Dynamics and Aesthetics (MDA) framework, but the real meat of his talk was how he actually applied those precepts to the design of Oasis. You can get the slides for that talk at his site as well, but it was much better live (and the ability to interact was key).

And now I’m just going to throw out random things that I remember from the talk in no particular order.

Blue Sky/Looking Glass actually started as a group of MIT students, one of whom had an uncle, Paul Neurath, who was working at Origin and wanted to start his own company.

One of the really odd parallels between Blue Sky and id Software is that at both studios all the developers started off living and working together in the same house – the Blue Sky house eventually had ten employees living in it. This both facilitated the work and kept initial production costs way down.

Warren said that when he first came to the Blue Sky house (to produce Ultima Underworld) the guys there wouldn’t talk to him until he got his laptop on the network and named it. Apparently, having a machine that you could name yourself was a big status symbol at MIT, and the idea that you weren’t “somebody” until your computer had a name carried over to Blue Sky. Warren said he named his computer “Elmer PHD” and that he uses that as his online tag now.

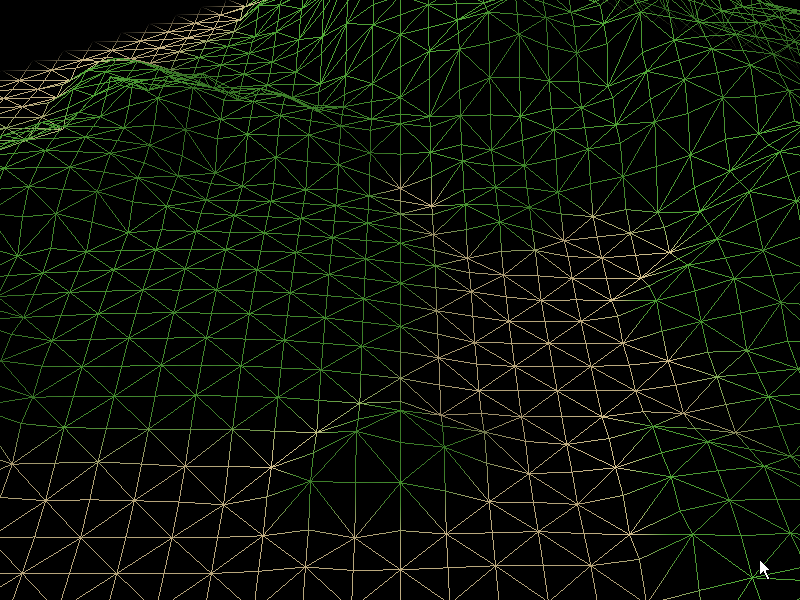

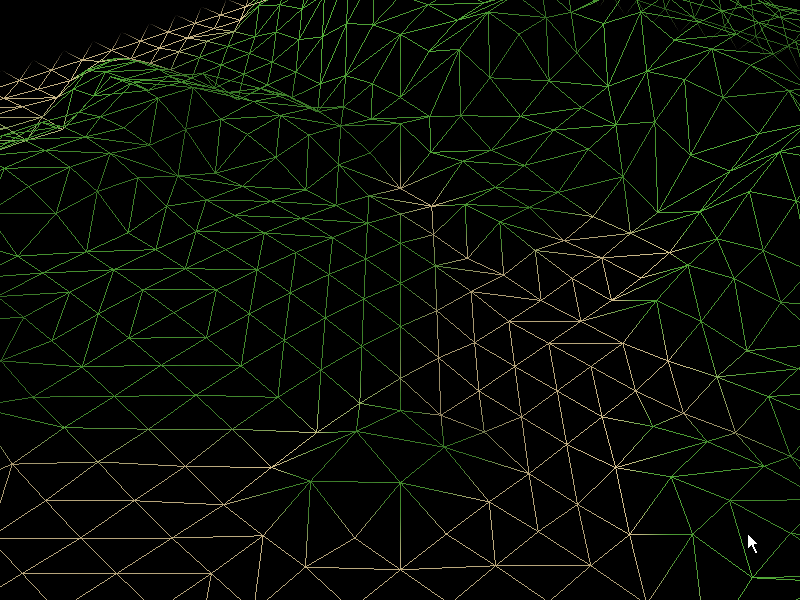

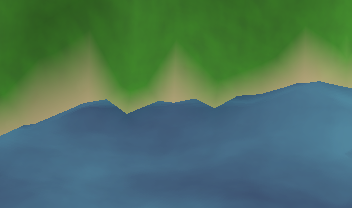

Warren said that Marc has the ability to play your game for a short time and tell you exactly what’s wrong with it and give you a whole bunch of ideas for improvement. How I wish I could have him play Planitia…

Marc finally left Blue Sky during the development of Terra Nova after he got into an argument with Dan Schmidt, the director, over a feature Marc didn’t want to implement.

Marc said that he liked the fact that his involvement with System Shock 2 was purely technical and didn’t have anything to do with the design because he could then actually play and enjoy the game!

Marc is very big on programmer/designers. He said that if you want to work at Mind Control Software, you can expect to get grilled on game design even if you’re interviewing for an art position. Warren chimed in and said that they do the same thing at Junction Point. Marc also mentioned that at Valve, there are no game designers – they have “gameplay programmers” instead. This neatly coincides with my two favorite game postmortems.

After it was all over I went over, shook his hand and thanked him for the Looking Glass stuff. He said, “Hey, I was just on the team.” I said, “Well, you’re the member of the team who is here, so I’m thanking you.” He didn’t seem to mind that.

Frankly I think the whole thing was good enough to put on TV, and I’m hoping that’s where it will end up. Looking forward to next Monday’s session, which will be with Mike Morhaime, one of the founders of Blizzard.